Well, not entirely, and if it is, it’s unlikely to be intentional.

It is more a misguided attempt by a growing bureaucracy to protect people and enable greater economic and social prosperity. Or maybe just a classic example of the nanny state.

I’ll admit that headline was meant to get those GDPR pundits and supporters all riled up. Clickbait, if you will.

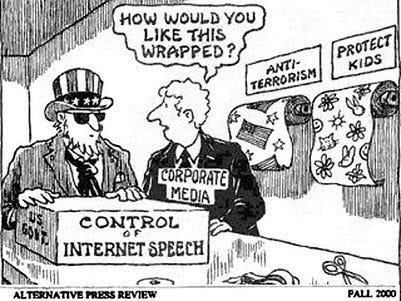

To the point: the regulations that became enforceable back in May, and that flooded your inbox, are possibly a paradigm shift for consumer empowerment. However, they give concessions to the core violators of our privacy and freedom: governments, security and intelligence agencies, and the collaborations between them, if they can even be deemed separate. (I’ll leave that for another post.) This is where our rights are most undermined. We are guilty until proven innocent. Any justification for revoking these rights and civil liberties is wrapped up as a way to protect us, or “the children”.

Whether it is protecting people from a boogeyman that happens to be politically convenient at the time, from those pesky terrorists, or from corporates in their ruthless pursuit of profit and growth at the expense of our privacy, it usually follows the same narrative.

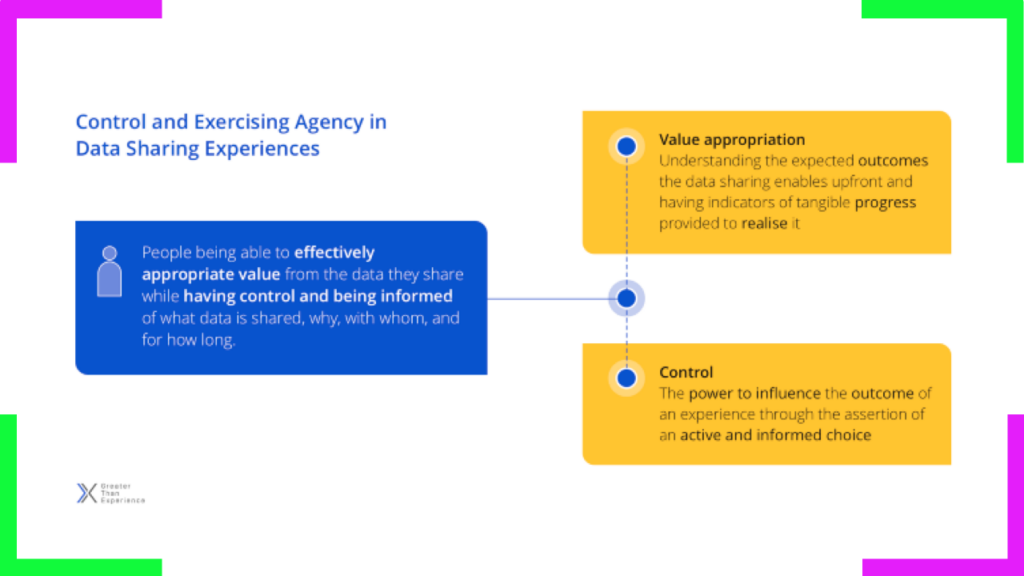

Don’t get me wrong, I support what can be perceived as the core tenets of GDPR, like protecting people’s privacy and unlocking the power and value of personal data for the benefit of the people the data relates to, and of all humanity. I know that empowering people with control and ownership over their personal data will facilitate a transformation of markets and business model innovation. We will see greater prosperity when the swathes of data we generate can be harnessed. Big and small data, working in harmony. Macro and micro insights to support individual and collective wellbeing. These things are pivotal to the evolution of our civilisation, and in this rapidly advancing technological age, protecting the sovereignty of our digital selves is paramount.

What I aim to highlight in this post is one of the most inherent contradictions in the GDPR: although there are protections in place to limit corporate surveillance, state-based surveillance will go unimpeded. I’ll also point out some possible unintended consequences and indicate where and what we should be looking to as the regulation catalyses a change in attitudes, behaviours, markets and business models.

GDPR as a catalyst for change

Although many good intentions underpin GDPR’s evolution, it will only end up empowering people as “consumers”. There is no doubt that a person’s right to privacy is crucial. But it is second to the primary and most fundamental right we can have as humans: self-sovereignty. In our real, analogue lives we have this, as free people. However, as citizens aboard the ship of the collectively imagined fiction that is the state, we do not. The state, apparently in pursuit of representing the collective will and interest of the people, does not enable this right. I won’t get into this philosophical quagmire here; I’ll leave that for another post at a later date. To the point: in the digital world we are rapidly creating, self-sovereignty is even more important. Our digital self is fractured, divided up to be traded. Through our data, as units or bundles of attributes, we are a commodity, simulated on a server to be targeted with experiments that continually optimise for incremental changes in perception and behaviour.

The GDPR will go some way towards addressing this, shifting the balance of power between individuals as consumers (Data Subjects) and businesses as vendors (Controllers and Processors).

We may end up witnessing a Cambrian explosion of new business models. Trust will be emphasised, and transparency over how people’s data is processed will follow. People will be able to exchange data for value and be its primary beneficiaries. Organisations will have an incentive to adopt practices like Privacy-by-Design, and perceptions and practices around the handling of personally identifiable information will change. This is all possible, and there are signs it is already happening.

But what have the regulations set out to achieve?

Let’s take it from the horse’s mouth.

“The twofold aim of the Regulation is to enhance data protection rights of individuals and to improve business opportunities by facilitating the free flow of personal data in the digital single market.” — Council of the European Union

Let’s unpack this a bit.

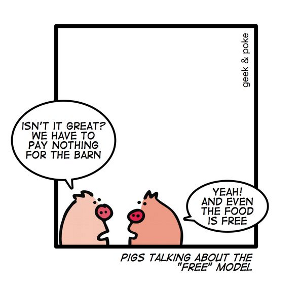

Data Protection Rights

The regulations aim to enhance the protection of people’s data and maybe, in some half-baked sense, their right to privacy. A worthy pursuit. Our privacy has been partly disregarded in the pursuit of corporate profit and the desire for a 360-degree view of the customer. The rapacious harvesting of people’s data has been a boon for the tech sector, particularly for companies like Facebook and Google, whose “free” services result in people and their data being used for those imperceptible changes in behaviour. The product is behaviour manipulation, sold to the highest bidder.

Then there are the data brokers, credit reporting agencies, SaaS marketing and advertising platforms, “Growth” marketeers, all wanting a slice of the PII. It has been a very lucrative run for businesses using this model of monetisation.

A term for this has been gaining momentum: surveillance capitalism. It stems from an article in the Monthly Review published in 2014 and is now commonly used in conversation and as a hashtag on social media platforms like Twitter.

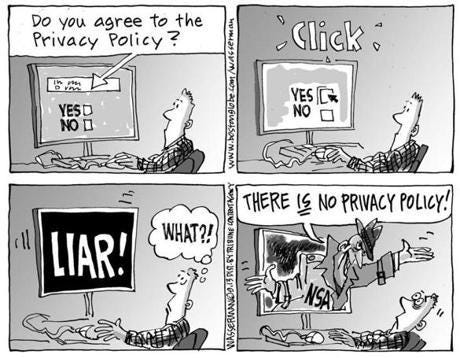

Monetising the data people generate, passively and actively, as they are surveilled throughout their digital lives has been the norm. Permission to process people’s data has been hidden deep in legalese-heavy privacy policies and terms of use, relying on the all too easy “I agree” button or the tick of a box.

Convenience trumps privacy after all, doesn’t it?

At other times, permissions are granted wholesale through people’s devices or a browser extension. Consent is implied by continuing to the next screen, or sometimes obtained through dark patterns: design patterns in user interfaces that intentionally trick us into giving away our data, and our power.

The whole web has evolved around a business model predicated on this unethical capture of people’s data. Trackers and cookies, sensors on our phones and in our streets, devices in our “smart” homes listening in on our conversations, even our children’s toys have become surveillance devices. The siphoning is so pervasive that something had to be done. Thus the bureaucrats, technocrats, consultants and companies came to the rescue with GDPR. Now rules are in place to ensure that consent is obtained upfront, freely given and opted into, and that individuals are given a clear view of the data to be processed, where it is stored and for what purpose. Overall this is positive. It limits the power of the surveillance capitalists. Data protection: win. Privacy: not so much.

A regulated freer market

This digital single market sounds great. Reduce the friction on trade in the digital economy and enhance prosperity for all citizens and members of the Union. Informed observers will know that the free flow of data is crucial to modern life and markets. It is the oil that lubricates the machinery of modern digital life. If it stops flowing freely, modern life and the markets that facilitate it will seize up.

These bottlenecks in the supply chain need to be removed.

The World Economic Forum published a report in 2011, Personal Data: The Emergence of a New Asset Class. The major finding was that:

“to unlock the full potential of personal data, a balanced ecosystem with increased trust between individuals, government and the private sector is necessary.”

Stakeholders in the EU realised the huge opportunity. The value that can be derived from a freer flow of this new asset class will enable economic and social prosperity. But with trust at all-time lows, something needed to be done for this vision of a digital single market to be truly realised.

Legitimate interests

While consumers are given the opportunity to consent to the processing of their personal data, they are also being forced into zero-sum experiences with so-called “legitimate processing”. In this context, if data is being processed on the basis of a legal obligation, the individual has no right to erasure, no right to data portability and no right to object. Take it or leave it. Like some new fifth law of thermodynamics: immovable, permanent and unchallengeable.
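To make the asymmetry concrete, here is a simplified lookup of which of three rights (erasure, portability and objection, under Articles 17, 20 and 21) are generally available under each of the six lawful bases in Article 6(1). It is a sketch assuming the summary table in the UK ICO’s guidance on lawful bases; exemptions and balancing tests are ignored, and the names `LawfulBasis`, `RIGHTS_BY_BASIS` and `available_rights` are hypothetical.

```python
from enum import Enum


class LawfulBasis(Enum):
    """The six lawful bases for processing listed in Art. 6(1) GDPR."""
    CONSENT = "consent"
    CONTRACT = "contract"
    LEGAL_OBLIGATION = "legal obligation"
    VITAL_INTERESTS = "vital interests"
    PUBLIC_TASK = "public task"
    LEGITIMATE_INTERESTS = "legitimate interests"


# Simplified assumption: which of the three rights generally apply per basis.
# Exemptions, balancing tests and special categories of data are ignored.
RIGHTS_BY_BASIS = {
    LawfulBasis.CONSENT: {"erasure", "portability"},  # consent can also be withdrawn
    LawfulBasis.CONTRACT: {"erasure", "portability"},
    LawfulBasis.LEGAL_OBLIGATION: set(),               # none of the three
    LawfulBasis.VITAL_INTERESTS: {"erasure"},
    LawfulBasis.PUBLIC_TASK: {"object"},
    LawfulBasis.LEGITIMATE_INTERESTS: {"erasure", "object"},
}


def available_rights(basis: LawfulBasis) -> set[str]:
    """Return a copy so callers cannot mutate the lookup table."""
    return set(RIGHTS_BY_BASIS[basis])


for basis in LawfulBasis:
    rights = ", ".join(sorted(available_rights(basis))) or "none of the three"
    print(f"{basis.value:>20}: {rights}")
```

The row for legal obligation is the empty set, which is the “take it or leave it” case described above.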

Article 23, on restrictions, indicates that individuals have these rights, notwithstanding matters of “National Security” among many other grounds, including ones that relate to the interests of the “Union”. Ultimately, we have rights, except in those cases where we don’t.

On the one hand, people (or maybe it is just our data?) are being given some protection from the intrusive surveillance and harvesting that is core to the established business models of the internet age 2.0. On the flip side, the mass surveillance conducted by the state will continue unimpeded, because it is necessary to fight the bad guys. Be alert, but not alarmed. And really, if you have nothing to hide, then you have nothing to fear.

We protect you

The GDPR is ultimately consumer-centric, and empowering consumers is a great initiative. These regulations will facilitate innovation and bring a more equitable share of value, and we are already seeing legislative changes in other jurisdictions. Tilting the balance of power towards the consumer certainly gets a tick. To do this we have a monolithic institution wielding the stick of economic violence. Fines of up to 20 million euros or 4% of global annual turnover, whichever is higher, are significant. We will witness these penalties applied to some naughty corporates, which will go a long way towards funding the bureaucratic machine, or at least its enforcement efforts. Fines and punishment are what we as a society expect the State to deliver: to punish us when we are bad little serfs, or to protect us from the evils of the private sector, bad actors, terrorists (“freedom fighters”) and tyranny…oh wait, not that last part.
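The “whichever is higher” rule is easy to miss, so here is a small sketch of the upper tier cap in Article 83(5) for an undertaking: the greater of EUR 20 million or 4% of total worldwide annual turnover for the preceding financial year. It computes a maximum, not an expected fine, and it ignores the lower tier in Article 83(4) (EUR 10 million or 2%). The function name is hypothetical.

```python
def max_gdpr_fine_eur(worldwide_annual_turnover_eur: int) -> int:
    """Upper limit of an Art. 83(5) fine: the higher of EUR 20m or 4% of turnover."""
    fixed_cap_eur = 20_000_000
    turnover_cap_eur = worldwide_annual_turnover_eur * 4 // 100  # integer euros
    return max(fixed_cap_eur, turnover_cap_eur)


for turnover in (50_000_000, 500_000_000, 5_000_000_000):
    print(f"turnover {turnover:,} EUR -> cap {max_gdpr_fine_eur(turnover):,} EUR")
```

The fixed EUR 20 million figure dominates until turnover passes EUR 500 million; beyond that the 4% figure grows with the size of the company.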

One thing this does achieve is to further entrench the perception that the State is there to protect people. But it will mainly succeed in making people more dependent on this institution for protection. A double-edged sword and a sort of Stockholm syndrome.

A big win for the legitimacy of the State.

On the surface the intentions may seem benign. But while the EU brought in this supposed set of protections for the consumer citizenry, member states continued on the rampage to get more data, knowledge and power. Whether through metadata retention laws, the inherently surveillance-based KYC/AML/CTF regulations or anti-terror laws, the siphoning of data and monitoring of people will continue. At about six terabytes a second.

The fears of terrorism, identity fraud and general bad actors are real and, to the majority of a citizenry fed news by the agenda-setting mainstream media (MSM), frighteningly real.

What is not wanted is an empowered and free people, able to come together as communities, collaborate and solve problems without being tracked and monitored. And coming soon to a theatre (surveillance state) near you: precrime convictions.

Ticking the box

Just as individuals have grown accustomed to ticking boxes to complete that e-commerce purchase of a shiny new gadget, compliance will be a box-ticking exercise for most organisations. After all, it’s just a cost burden on a business and will rarely lead to innovation or value creation. Some companies are using the regulations as an opportunity to innovate and create shared value with their customers. But this is hard. For companies with a culture of true innovation and those that practise privacy-by-design, it is a natural transition, if it requires much at all. These organisations are few and far between.

Most organisations will comply, and those that don’t will fund the growth of the bureaucratic state. Some businesses could become so overburdened by compliance costs that they end up insolvent. Others will simply be acquired by larger companies that have managed to maintain compliance, or just haven’t been caught out yet. Of course, there is a whole burgeoning industry called RegTech set to be the hero of that story, for the businesses that can afford it anyway. Compliance-by-design.

“we have inspectors of inspectors and people making instruments for inspectors to inspect inspectors.” — Buckminster Fuller

Consent fatigue and behaviour change

By now many of you reading this will have experienced the bombardment of emails and consent notices and the poor, misguided attempts at re-consent. Businesses had sufficient time to prepare and design experiences that were delightful and compliant, or to reframe the nature of the requirements and innovate. To seize the opportunity. But most did not.

It was like a student cramming the night before a major assignment is due and then delivering lacklustre work. Poorly thought through and lazily delivered.

The need for freely given and unambiguous consent, when there is no other legal basis for processing, has been a catalyst for innovation. Along with this, the controller needs to be able to demonstrate that consent was given, and a record of this agreement should be timestamped and provided to all parties. For an example of this, see the interesting work the Kantara Initiative has done on Consent Receipts.
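As a rough illustration of the idea, a consent receipt can be modelled as a small timestamped record of which both parties keep identical copies. The structure below is a hypothetical simplification: its field names (`subject_id`, `controller`, `purposes`, `policy_url`) and helper functions are assumptions for illustration and do not follow the Kantara specification’s schema. A SHA-256 fingerprint over a canonical JSON encoding lets either party detect a later edit to their copy.

```python
import copy
import hashlib
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentReceipt:
    # Hypothetical, simplified fields; NOT the Kantara Consent Receipt schema.
    subject_id: str        # pseudonymous identifier for the data subject
    controller: str        # organisation collecting the data
    purposes: list[str]    # what the data will be processed for
    policy_url: str        # where the full privacy notice lives
    receipt_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def fingerprint(self) -> str:
        """SHA-256 over canonical JSON, so any later edit to a copy is detectable."""
        canonical = json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def issue_receipt(subject_id: str, controller: str, purposes: list[str], policy_url: str):
    """Create one timestamped receipt and return identical copies for subject and controller."""
    receipt = ConsentReceipt(subject_id, controller, list(purposes), policy_url)
    record = {"receipt": asdict(receipt), "sha256": receipt.fingerprint()}
    return copy.deepcopy(record), copy.deepcopy(record)


def verify(record: dict) -> bool:
    """Recompute the fingerprint of a stored copy and compare it with the recorded one."""
    return ConsentReceipt(**record["receipt"]).fingerprint() == record["sha256"]


subject_copy, controller_copy = issue_receipt(
    "subject-123", "Example Ltd", ["newsletter"], "https://example.com/privacy"
)
print(verify(subject_copy), subject_copy == controller_copy)  # prints: True True
```

A bare hash only helps if the fingerprint itself is kept safe; a real design would more likely have the controller sign the receipt so the subject can prove who issued it.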

A plethora of products and services to manage consent through dashboards or Personal Information Management Systems are sprouting up, some of which have been around for almost a decade. For the ones that have been around the longest, GDPR is a vindication of arguments they have been making for years.

I’m very skeptical as to whether this will work. The ingrained habit of skipping over the detail and pressing the “I agree” button or “checking the box” is likely to continue. For better or worse, convenience trumps privacy for most. We are (mostly) utilitarian. Although privacy is important to people, they want to get to the outcome, whether that is reading a news article or having that shiny gadget paid for and on its way to their door. They don’t want to read something written in a language they don’t understand, particularly when it is 50+ pages long. This raises the question: if someone does not understand the terms of a contractual arrangement, is it void anyway? There seems to be an assumption that a reasonable person will simply understand their contractual obligations. Capacity to contract may one day be called into question once some case law is established, but I’m not counting on it. In our current social paradigm this may seem like a moot point; law is law, whether you understand it or not.

Besides, who wants to be managing all these decisions at every interaction with an organisation?

This is of concern to many practitioners in this space who are working hard to solve this set of challenging design problems.

Until we have machine learning based personal assistants to manage our privacy preferences, we will be overloaded. In some instances, privacy dashboards and Personal Information Management Systems may help manage our preferences. For the most part, these assistants will only be accessible to those who have the required digital literacy or the money to pay for the services. For the rest, again, ticking the box will do. Never mind the data used to train this, ah, machine.

On the horizon

The cat is out of the bag and we will just have to iterate from here. In support of pragmatism and incremental progress, and in the pursuit of privacy and digital self-sovereignty, I am overall very positive towards initiatives like GDPR. Maybe similar legislation will follow in other jurisdictions, although I am not hopeful it will matter all that much in giving people real privacy and control over what they choose to share and what they do not. The regulations seem to me to be arriving at a time when people are realising the power they have and the tools increasingly at their disposal. Like a second renaissance and a reinvigoration of the principles espoused in the Cluetrain Manifesto.

This cumulative awareness was in part instigated by the revelations from Edward Snowden and more recently emphasised by data breaches such as the one at Equifax and the Cambridge Analytica and Facebook shenanigans. It is an awakening to the value of our personal data, not just in a monetary and directly economic sense, but in a social and cultural sense. This will only give more credence to the projects that are building new systems and networks.

Project SOLID, which Tim Berners-Lee is undertaking with colleagues at MIT, is just one of them. Another, from Scottish firm MaidSafe, is the autonomous data and communications network SAFE (Secure Access For Everyone), which has the lofty goal of enabling a web with privacy, security and freedom embedded into its design. Projects of this type put us humans in control of our personal data by default.

There is a plethora of projects, companies, conferences and initiatives moving us towards the digital age envisioned by the early pioneers of the web and thought leaders on digital identity.

The list below is not exhaustive, but it is indicative of what is out there:

- SAFE Network

- Dat Project

- Holochain

- Electronic Frontier Foundation

- Blockstack

- Decentralized Identity Foundation

- Secure Scuttlebutt

- Meeco

- Digi.me

- MyData

- Project VRM

- Brave Browser

- Web3 Foundation

- Inrupt

- Project SOLID

There are plenty of others, and you are welcome to contribute to a list I have started on GitHub.

I would really like to see more inter-project and protocol collaboration.

Concepts like Self-Sovereign Identity are gaining momentum, and a truly open ecosystem is on the horizon. New p2p models for social organisation, and platforms that open up the possibilities, are being discussed and debated. New tools are being designed and built. Some are practical and pragmatic, others more explorative and utopian. We need both.

There are many challenges to be overcome across the human, business, legal and technical layers: data interoperability, key management, personal data property rights, web standards, and broader adoption of the tools out there, combined with education on how to protect your privacy. Then there is the biggest barrier: political will, courage and true leadership. Getting the authorities to capitulate and empower the people they are actually paid to serve is the biggest challenge. It touches on the most established institutions of our epoch: the ledger maintainers and record keepers, and the social contract/construct that, apparently, binds us in collective harmony.

I cannot see into the future; I can only see possibilities. I am choosing to focus on ones where the current models are merely a stepping stone to the liberation of our physical and digital selves. Encryption and privacy tools abound. Cypherpunks, crypto-anarchists, privacy activists and open source aficionados are releasing free and open source tools for all to share and benefit from. No mass recognition needed. A pipe dream to some, or maybe just a matter of connecting the dots and actively working towards it.

“If you want to teach people a new way of thinking, don’t bother trying to teach them. Instead, give them a tool, the use of which will lead to new ways of thinking.” ― Buckminster Fuller

Imagine if all the energy and resources devoted to GDPR, and to the business and system transformation needed for compliance, were instead devoted to educating people. Even better, to really empowering them by designing and engineering tools that have privacy and security by default. Many of the thousands of pages of legalese would be redundant.

Last quote, I promise… I recall first coming across it in Manuel Castells’ writings. On the web it is sometimes attributed to an African proverb, but it is usually paraphrased and used by Caucasian males like me.

“We don’t inherit the world from our parents we borrow it from our children”

I look forward to a world where future generations are not digital serfs. I’m sure many of you do too.
